Under the EU’s Digital Services Act (DSA), very large online platforms have to assess the risks their core services pose to individuals and society.

Have they become powerful launchpads for trolls and bullies to spread disinformation and hate? What impact do they have on public health, on the quality of public debate, on electoral processes? And how do they plan to mitigate these risks?

Among the key design layers of big tech’s platforms that systematically risk causing harm are their recommender systems. The role of these algorithmic machines is to rank, filter and target content to individual users. There is nothing bad about filtering and targeting content per se. In fact, without such algorithmic filters, we would be entirely lost on the internet. But dominant platforms chose to prioritise user engagement over safety and designed their recommender systems to keep us clicking and scrolling for as long as possible.

There’s a growing body of research showing that engagement-driven recommendations push people towards political extremes by fuelling outrage, drive social media addiction, amplify polarising content, and put vulnerable people’s mental health at risk.

My colleagues and I founded the Panoptykon Foundation in Poland 15 years ago to defend humans from the threats of rapidly changing technologies and growing surveillance. Recommender systems are one such threat, and one that policymakers neglected until the situation became critical.

It’s clear that for the DSA to neutralise some of the harms related to the social media business model, recommender systems need urgent and special attention from the European Commission and national regulators.

At Panoptykon we know that to make this happen we need to pool expertise and campaigning power from across the network supported by Civitates. This is why in 2022 I came up with the idea of a Recommender Systems Task Force: an informal platform to coordinate our projects and share intel from Brussels. Initially it was hard to turn this idea into an operational structure capable of delivering concrete products. But in 2023 everything came together, from targeted research to campaigning, and as a group we gained leverage in our conversations with the European Commission.

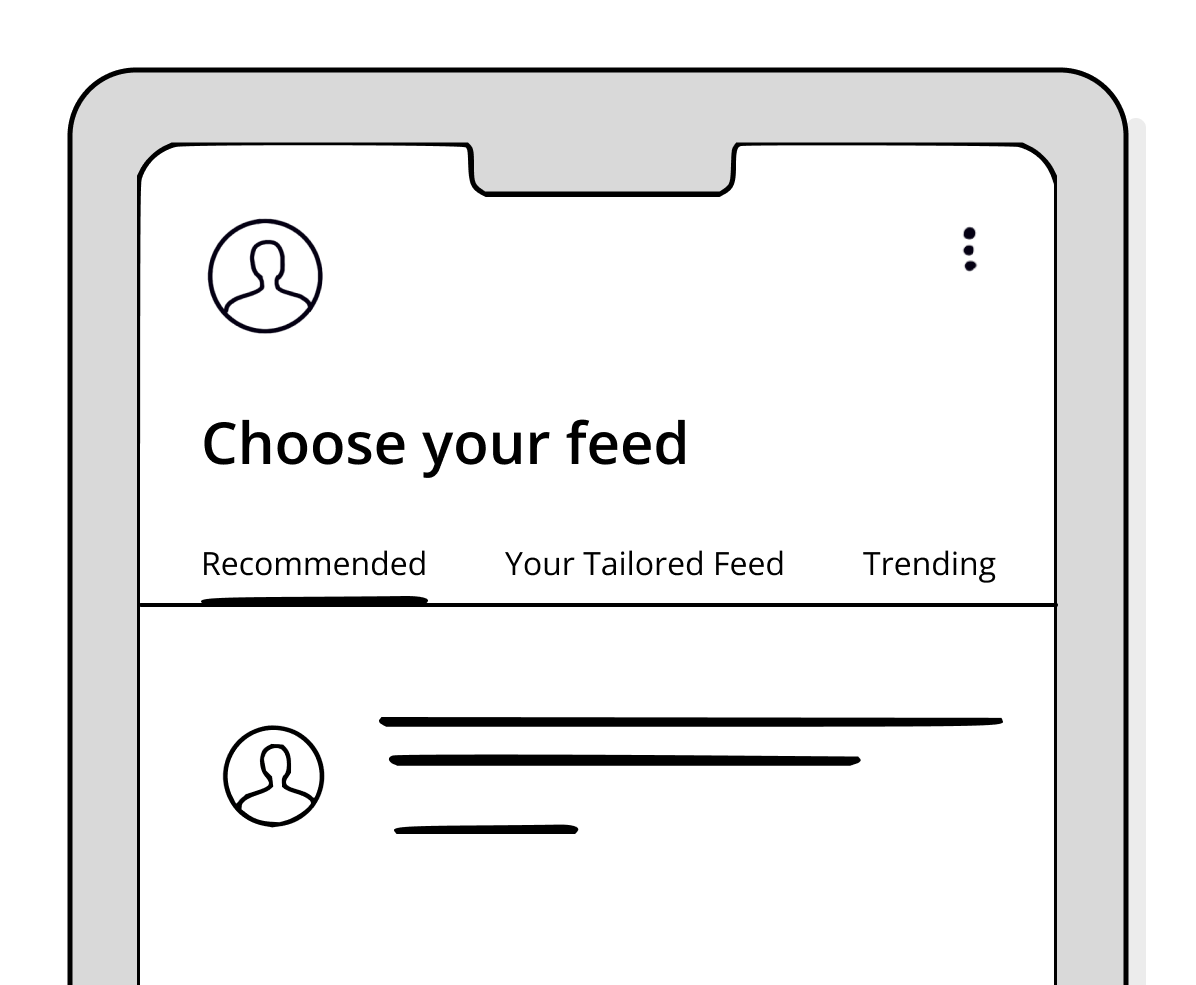

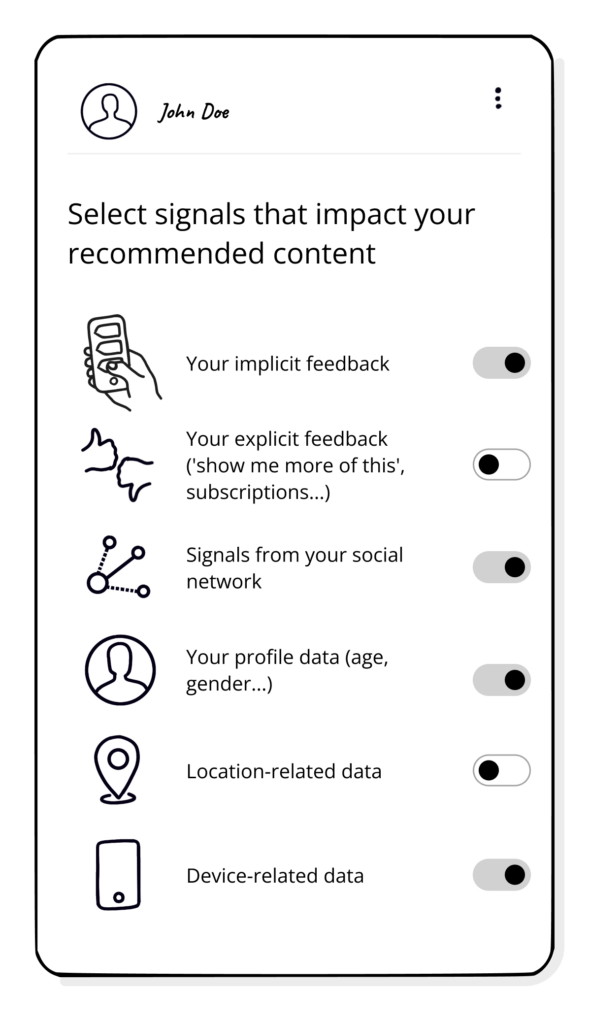

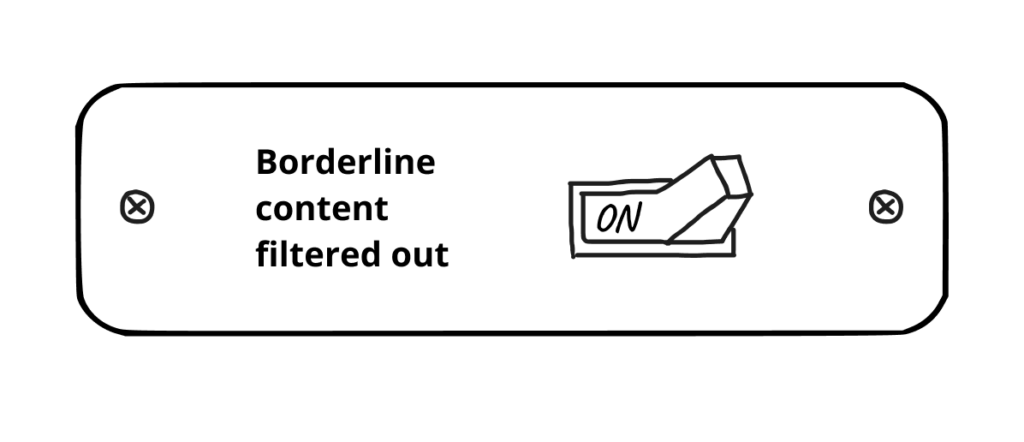

In our research, we first identified the design features of large platforms’ recommender systems that prove harmful, and then prototyped alternative designs to show that these powerful algorithmic machines can be made safer. We developed a novel approach to what used to be called “user settings”, which failed to give users meaningful control over their feeds. We believe that authentic personalisation in recommender systems can be achieved without tracking and profiling users’ behaviour. This technology can be designed to serve humans instead of manipulating, enraging and addicting them. But for that, the EU will have to challenge big tech’s business paradigm. We are not talking about cosmetic changes here.

Part of the challenge we face in this battle for a better, healthier online public sphere is denial. This is the attitude of the ordinary internet user who might experience mental health issues, problems in relationships and compulsive use of social media, or be lured towards extremism, but still accepts that “it is how it is”. Nobody has ever told them that the harm they suffer comes from intentionally bad design, or that a different kind of online experience is possible. This is why we will continue to push the message: with the DSA in place, healthier and safer recommender systems are within our reach. We just need regulators to do their job and tech companies to comply.

I think what makes our work important is that we do not give up, even though these battles are extremely complicated and very long term.